Groq News | Sep 12, 2024

Groq delivers a fast and free LLM API enabling new experiments, according to Justin Miehle...

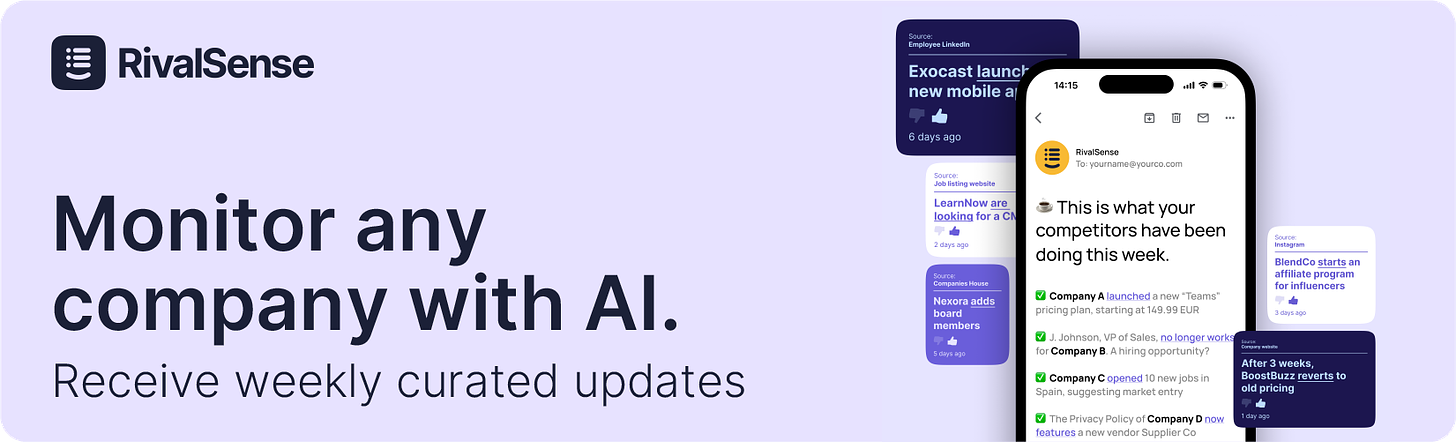

Brought to you by RivalSense - an AI tool for monitoring any company.

RivalSense tracks the most important product launches, fundraising news, partnerships, hiring activities, pricing changes, tech news, vendors, corporate filings, media mentions, and other developments of companies you're following 💡

Groq

🌎 groq.com

Groq is the creator of the LPU™ AI Inference Technology for fast, affordable, and energy-efficient AI. Headquartered in Silicon Valley, Groq provides cloud and on-prem solutions at scale for AI applications. The LPU and related systems are designed, fabricated, and assembled in North America.

Updates as of Sep 12, 2024

- Groq delivers a fast and free LLM API enabling new experiments, according to Justin Miehle.

- A new website, wordspinner.in, powered by Groq, allows users to save and run frequently used prompts and compare their performance on different text inputs.

- Groq uses custom LPU chips designed around transformers, indicating a shift away from Nvidia/CUDA.

- SambaNova Systems launched a cloud-based AI inference service to rival Groq and Cerebras, claiming the fastest inference speeds in the world.

- Groq and Aramco Digital plan to begin serving large volumes of tokens globally later this year and into 2025.

- Groq will offer on-demand services from Saudi Arabia by year-end, providing 20% of its token-as-a-service traffic from the region.

- Groq announced new advances in inference speed on its first-generation 14 nm chip.

- DigitalEx's new platform helps enterprises manage AI-related costs across multiple platforms, including Groq.

- Groq's LLMs power an AI data analyst that uses E2B's Code Interpreter SDK to securely run AI-generated code and plot linear regression charts from CSV data.

- Scribe for iOS now provides YouTube summaries powered by Mixtral 8x7B running on Groq.

- Groq CEO Jonathan Ross was interviewed on BBC News about the company's technology and future plans.

- Groq CEO Jonathan Ross discussed on BBC News the company's development, with Aramco Digital, of the largest compute center for AI inference.

- Pejman Ebrahimi built an app using Groq's LLaVA V1.5 7B multimodal model, Meta's Llama-3.1-70B, and Gradio.

- Groq set a new speed record, according to CEO Jonathan Ross.

- Groq will provide 20% of its on-demand token-as-a-service for AI inference from Saudi Arabia by the end of the year.

- Maximus Hertzfelt deployed a simple LLM chatbot using Groq in less than 5 minutes with Replit Agent.
